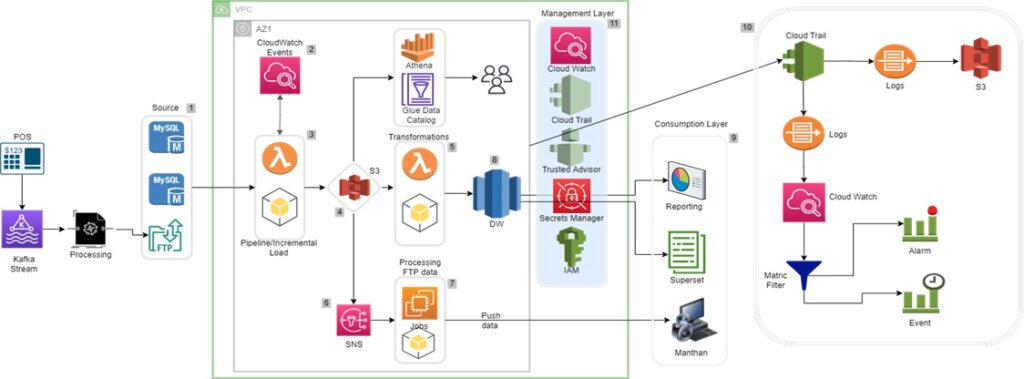

Proposed Architecture in AWS

Their POS data is fed into the system through Kafka, and files are copied to an FTP site.

1. Data sources – data from multiple MySQL databases and an FTP site (XML format).

2. Time-based rules trigger the ingestion process.

3. Lambda functions written in Python fetch the incremental data.

4. Landing zone – S3; all data is pushed here in its original format.

5. Transformation & validation – Lambda and Python.

6. Notification – SNS; as soon as a new file from the FTP site arrives in S3, it triggers further processing.

7. Read, transform & copy data to Manthan.

8. Central DW – Redshift; clean, ready-to-use data is stored as a normalized dataset.

9. Consumption layer – the data can be used for various downstream purposes.

10. Logging – historical logs are saved in S3, and various metrics are used to trigger either an event or an alarm.

11. Management layer – for security, passwords and access keys are stored in AWS Secrets Manager. User access and AWS service access are managed via IAM, and AWS CloudTrail and Amazon CloudWatch are configured for logging.
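The incremental fetch done by the Python Lambda can be sketched as a high-watermark query: remember the latest `updated_at` value already extracted and pull only newer rows. This is a minimal illustration, not the project's actual code. It uses the standard-library `sqlite3` module as a stand-in for MySQL, and the `orders` table, its `updated_at` column, and the watermark handling are hypothetical. In a real Lambda the connection would come from a MySQL driver and the watermark would need to be persisted between runs.

```python
import sqlite3
from typing import Any


def fetch_incremental(
    conn: sqlite3.Connection, last_watermark: str
) -> tuple[list[tuple[Any, ...]], str]:
    """Return rows changed after last_watermark plus the new watermark.

    Hypothetical schema: orders(id, amount, updated_at), where updated_at
    is an ISO-8601 string, so string order matches time order.
    """
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark only when new rows were found.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, amount REAL, updated_at TEXT)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [
            (1, 10.0, "2024-01-01T08:00:00"),
            (2, 25.5, "2024-01-02T09:30:00"),
            (3, 7.25, "2024-01-03T11:15:00"),
        ],
    )
    rows, wm = fetch_incremental(conn, "2024-01-01T08:00:00")
    print(len(rows), wm)  # 2 2024-01-03T11:15:00
```

The strict `>` comparison assumes no new rows later appear with a timestamp equal to the stored watermark; if that can happen, a common variant uses `>=` and de-duplicates on the primary key.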
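The transformation and validation step for the XML files that arrive via FTP could look roughly like the sketch below: parse each document, reject records that are missing required fields or carry malformed numbers, and emit typed rows. It relies only on the standard-library `xml.etree.ElementTree`. The `<sale>` element layout and the `REQUIRED_FIELDS` list are hypothetical, because the actual XML schema is not described here.

```python
import xml.etree.ElementTree as ET
from decimal import Decimal, InvalidOperation

# Hypothetical required child elements of each <sale> record.
REQUIRED_FIELDS = ("store_id", "sku", "quantity", "amount")


def transform_sales_xml(xml_text: str) -> tuple[list[dict], list[str]]:
    """Parse a POS XML document into valid rows and a list of error messages."""
    root = ET.fromstring(xml_text)
    valid: list[dict] = []
    errors: list[str] = []
    for index, sale in enumerate(root.iter("sale")):
        # findtext returns None for a missing element and "" for an empty one.
        record = {f: (sale.findtext(f) or "").strip() for f in REQUIRED_FIELDS}
        missing = [f for f, value in record.items() if not value]
        if missing:
            errors.append(f"record {index}: missing {', '.join(missing)}")
            continue
        try:
            # Type conversion doubles as validation of numeric fields.
            record["quantity"] = int(record["quantity"])
            record["amount"] = Decimal(record["amount"])
        except (ValueError, InvalidOperation):
            errors.append(f"record {index}: bad number")
            continue
        valid.append(record)
    return valid, errors


if __name__ == "__main__":
    sample = """<sales>
      <sale><store_id>S1</store_id><sku>A-100</sku><quantity>2</quantity><amount>19.98</amount></sale>
      <sale><store_id>S2</store_id><sku></sku><quantity>1</quantity><amount>5.00</amount></sale>
    </sales>"""
    rows, errs = transform_sales_xml(sample)
    print(len(rows), errs)  # 1 ['record 1: missing sku']
```

Returning rejected records alongside valid ones lets the Lambda write both, for example good rows onward and errors to a log. For files supplied by external parties, the Python documentation recommends hardened XML parsing (such as the third-party `defusedxml` package).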
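For the SNS notification step, one common wiring is for S3 to publish an event notification to an SNS topic that invokes a Lambda. Assuming that wiring (the architecture names only SNS and S3), the Lambda receives an SNS envelope whose `Sns.Message` field holds the S3 event serialized as a JSON string. The minimal handler below extracts the bucket and key of each new file. `process_file` and the `landing-zone` bucket name are hypothetical placeholders.

```python
import json
from urllib.parse import unquote_plus


def process_file(bucket: str, key: str) -> None:
    """Hypothetical placeholder for the downstream transform/validate step."""
    print(f"processing s3://{bucket}/{key}")


def extract_s3_objects(event: dict) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs from an SNS-wrapped S3 event notification."""
    objects = []
    for sns_record in event.get("Records", []):
        # SNS delivers the S3 notification as a JSON string in Sns.Message.
        s3_event = json.loads(sns_record["Sns"]["Message"])
        # S3 test events carry no "Records" key, so default to an empty list.
        for s3_record in s3_event.get("Records", []):
            bucket = s3_record["s3"]["bucket"]["name"]
            # Object keys arrive URL-encoded (for example, spaces as '+').
            key = unquote_plus(s3_record["s3"]["object"]["key"])
            objects.append((bucket, key))
    return objects


def lambda_handler(event: dict, context: object) -> dict:
    """Entry point: hand every newly arrived file to the next stage."""
    objects = extract_s3_objects(event)
    for bucket, key in objects:
        process_file(bucket, key)
    return {"processed": len(objects)}


if __name__ == "__main__":
    s3_event = {
        "Records": [
            {"s3": {"bucket": {"name": "landing-zone"},
                    "object": {"key": "ftp/sales+2024-01-01.xml"}}}
        ]
    }
    sns_event = {"Records": [{"Sns": {"Message": json.dumps(s3_event)}}]}
    print(lambda_handler(sns_event, None))
    # processing s3://landing-zone/ftp/sales 2024-01-01.xml
    # {'processed': 1}
```

Keeping the parsing in `extract_s3_objects`, separate from the handler, makes the event handling testable with plain dictionaries and no AWS access.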